Benchmarking & Usability

The first time I conducted formal benchmarking was when I was marketing development tools. The product line had built its reputation on compile speed, which gave us a strong leverage point for de-positioning the competition. Within this group, we had three dedicated engineers who created test scenarios to constantly compare our builds against existing and competing products.

In some cases, our attention to benchmarking let us beat a competitor's compile times by 400%. In other cases, benchmarking exposed bugs and leaks in our own products, which became evident when performance dropped significantly. The effort proved valuable and delivered a strong ROI.

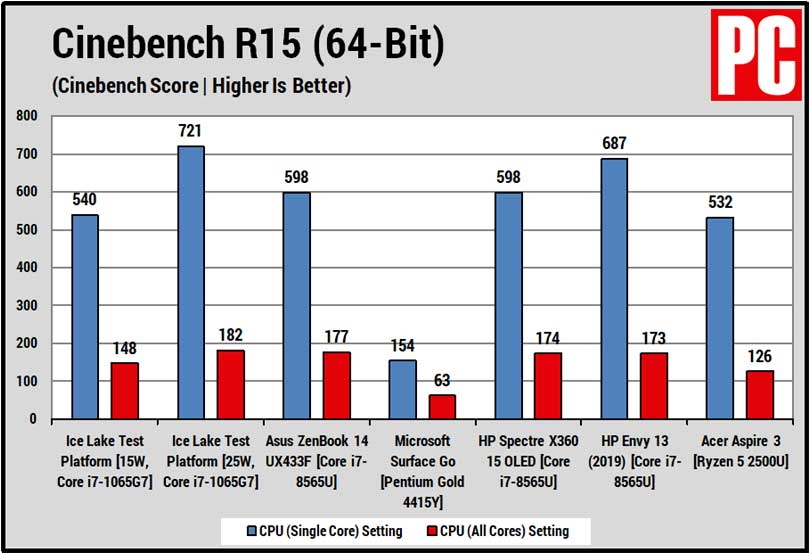

Other industries are also judged on timing benchmarks, including semiconductors, graphics cards, rendering, scanning, and printing (especially color printing). Many products could carve out a better competitive position if product management determined which benchmarks matter to their users and then ensured that the product ships with the required capability.

Other products can be benchmarked not on the operational speed of the software or hardware, but on how operators use them, through a usability test. Counting keystrokes to project completion gives you a measure you can use to improve the interface dramatically, validate the improvement empirically, and leverage the result when you position the product.
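
As a rough illustration of counting input actions per task, here is a minimal Python sketch. The `TaskLog` record, the `actions_per_task` helper, the product names, and all of the numbers are hypothetical placeholders, not data from any real usability test.

```python
from dataclasses import dataclass


@dataclass
class TaskLog:
    """Input events recorded while one operator completed one task."""
    product: str
    task: str
    keystrokes: int
    mouse_clicks: int

    @property
    def total_actions(self) -> int:
        return self.keystrokes + self.mouse_clicks


def actions_per_task(logs):
    """Average total actions per (product, task) pair across operators."""
    totals = {}
    for log in logs:
        key = (log.product, log.task)
        count, running = totals.get(key, (0, 0))
        totals[key] = (count + 1, running + log.total_actions)
    return {key: running / count for key, (count, running) in totals.items()}


# Hypothetical observations, not measurements of any real product.
sample_logs = [
    TaskLog("Product A", "trim clip", keystrokes=4, mouse_clicks=2),
    TaskLog("Product A", "trim clip", keystrokes=6, mouse_clicks=2),
    TaskLog("Product B", "trim clip", keystrokes=9, mouse_clicks=5),
]

averages = actions_per_task(sample_logs)
for (product, task), average in sorted(averages.items()):
    print(f"{product} / {task}: {average:.1f} actions")
```

Averaging across several operators, rather than relying on one session, keeps a single fast or slow user from skewing the comparison.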

Areas to test may include:

- Installation time. This was important to one company I worked with, which sold through a relatively nontechnical national reseller. A competitor had similar pricing, but its product could be installed in one-quarter of the time (5 hours versus 20). That made the competitor’s product cheaper to install and cheaper overall for the customer.

- Keystrokes/time per task. It is simple to compare keystrokes or mouse clicks per task between different applications, and these counts measure interface efficiency. While doing so, you can also check compliance with Windows/OS standards, in case a competitor has an efficient but nonstandard interface that you must position against. A perfect example would be Pinnacle Software’s editing package versus Adobe Premiere or Sonic Foundry’s Vegas. Pinnacle’s software has some nice shortcuts, but it does not follow Windows conventions. As a result, it may be easier to use but much more difficult to learn, which is a positioning leverage point for or against the software, depending on whether you work for Pinnacle or a competitor. If you know your interface advantages, you can emphasize the tasks that you do faster and de-emphasize the others (until you can fix them). For an example of counting keystrokes, see the Usability section of ConvergeHub – Usability Testing.

- Time to final output. For a scanner, the final output is a scanned page; for a printer, a printed page; for a compiler, compiled code; for a video editing or 3D modeling package, a finished render; for a Web page, a loaded page. All of these can be timed and leveraged.

- Stability. Linux and Unix applications position their average time between required reboots against Windows. When I competed against Delrina, I leveraged how many faxes our product could send before crashing (infinite) against WinFax (about 50). Vegas from Sonic Foundry can complete entire projects that take weeks without a single crash, whereas Adobe Premiere is lucky to run for an hour without crashing.

- Accuracy. This is a common benchmark for OCR packages, spell checkers, and grammar programs. It also proved critical for Intel processors (with the rounding error) and for Lotus 1-2-3.
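
For the timing-based areas above, a small harness is often enough to get started. The following is a minimal Python sketch, not a production benchmark suite: `time_task`, `speedup`, `is_regression`, the 10% tolerance, and the `sample_task` workload are all assumptions chosen for illustration.

```python
import statistics
import time


def time_task(task, runs=5):
    """Return the median wall-clock seconds over `runs` calls to task()."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def speedup(baseline_seconds, candidate_seconds):
    """How many times faster the candidate is than the baseline."""
    return baseline_seconds / candidate_seconds


def is_regression(previous_seconds, current_seconds, tolerance=0.10):
    """Flag a build that is more than `tolerance` slower than the previous one."""
    return current_seconds > previous_seconds * (1 + tolerance)


def sample_task():
    # Stand-in workload; replace with the real compile, render, scan, or page load.
    sum(i * i for i in range(100_000))


median_seconds = time_task(sample_task)
print(f"median time: {median_seconds:.4f} s")

# The installation example from the list: 20 hours versus 5 hours.
print(f"install speedup: {speedup(20, 5):.1f}x")
```

Using the median of several runs reduces the influence of one-off delays such as disk caching or background processes. A check like `is_regression` is one way to surface the kind of bugs and leaks that show up as a sudden slowdown between builds.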

Most software and hardware can benefit from benchmarking. You will need to evaluate the critical variables within your category to determine the financial value you can derive from the exercise. Some categories, like graphics cards, live and die by benchmarks; others see only a marginal benefit. The value in your category will determine how many resources you should apply to the effort.